
GitHub - ivangrov/Android-Deep-Learning-with-OpenCV: A repository with code that accompanies a series of videos I have made on YouTube about Deep Learning on Android (github.com)

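At first I tried object detection by following the link above, but it did not work with OpenCV 4.7.0.

From what I found by searching, it is expected not to work on versions after OpenCV 3.4.5. I tried downgrading, but that produced a new error during the CMake build that I could not resolve, so I went back to OpenCV 4.7.0.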

All of the steps below follow the post linked here and assume that the OpenCV 4.7.0 module is already installed.

https://webnautes.tistory.com/1087?category=704164

Face Detection on Android using OpenCV and the NDK (webnautes.tistory.com)

1. Create a new directory named assets under app/src/main.

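2. Open the link for each cascade file and press Ctrl + S on that page to save the file (this works only in the Chrome web browser). The code in this post expects two files: haarcascade_frontalface_alt.xml and haarcascade_eye_tree_eyeglasses.xml.

Hold Ctrl and drag each file onto the assets directory in the Project panel, release the left mouse button, and then click the Refactor button to copy it.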

3. Add the external storage permission to the AndroidManifest.xml file.

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

For API 29 or higher, also add the following attribute to the application element:

android:requestLegacyExternalStorage="true"

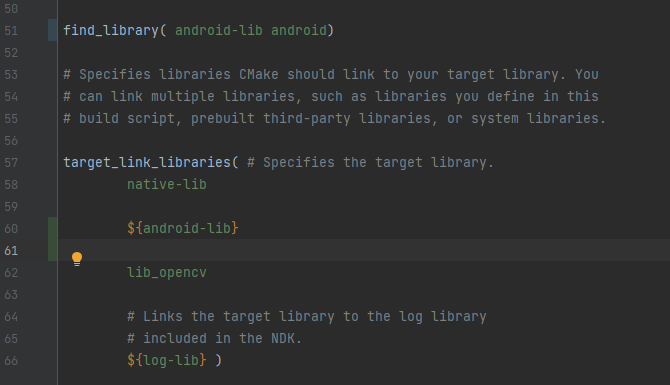

4. Modify the CMakeLists.txt file inside the cpp directory.

Click Sync Now.

5. Modify MainActivity

package com.example.myapplication;

import androidx.appcompat.app.AlertDialog;
import androidx.appcompat.app.AppCompatActivity;

import android.content.DialogInterface;
import android.content.Intent;
import android.content.res.AssetManager;
import android.net.Uri;
import android.os.Bundle;
import android.annotation.TargetApi;
import android.content.pm.PackageManager;
import android.os.Build;
import android.os.Environment;
import android.util.Log;
import android.view.SurfaceView;
import android.view.View;
import android.view.WindowManager;
import android.widget.Button;

import org.opencv.android.BaseLoaderCallback;
import org.opencv.android.CameraBridgeViewBase;
import org.opencv.android.LoaderCallbackInterface;
import org.opencv.android.OpenCVLoader;
import org.opencv.core.Core;
import org.opencv.core.Mat;
import org.opencv.imgcodecs.Imgcodecs;
import org.opencv.imgproc.Imgproc;

import java.io.File;
import java.io.FileOutputStream;
import java.io.InputStream;
import java.io.OutputStream;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.Semaphore;

import static android.Manifest.permission.CAMERA;
import static android.Manifest.permission.WRITE_EXTERNAL_STORAGE;

public class MainActivity extends AppCompatActivity
        implements CameraBridgeViewBase.CvCameraViewListener2 {

    private static final String TAG = "opencv";

    private Mat matInput;
    private Mat matResult;

    private CameraBridgeViewBase mOpenCvCameraView;

    //public native void ConvertRGBtoGray(long matAddrInput, long matAddrResult);

    // Right-click each native declaration below to generate its JNI function.
    public native long loadCascade(String cascadeFileName);
    public native void detect(long cascadeClassifier_face,
            long cascadeClassifier_eye, long matAddrInput, long matAddrResult);

    public long cascadeClassifier_face = 0;
    public long cascadeClassifier_eye = 0;

    static {
        System.loadLibrary("opencv_java4");
        System.loadLibrary("native-lib");
    }

    // Guards matResult, which is shared by the camera callback and the save button.
    private final Semaphore writeLock = new Semaphore(1);

    public void getWriteLock() throws InterruptedException {
        writeLock.acquire();
    }

    public void releaseWriteLock() {
        writeLock.release();
    }

    // Copies a file from assets into the app's internal files directory.
    private void copyFile(String filename) {
        AssetManager assetManager = this.getAssets();
        File outputFile = new File(getFilesDir() + "/" + filename);

        Log.d(TAG, "copyFile :: copying file to " + outputFile.toString());
        // try-with-resources closes both streams even if the copy fails midway.
        try (InputStream inputStream = assetManager.open(filename);
             OutputStream outputStream = new FileOutputStream(outputFile)) {
            byte[] buffer = new byte[1024];
            int read;
            while ((read = inputStream.read(buffer)) != -1) {
                outputStream.write(buffer, 0, read);
            }
            outputStream.flush();
        } catch (Exception e) {
            Log.d(TAG, "copyFile :: exception while copying file " + e.toString());
        }
    }

    // Copies both cascade files out of assets and loads them through JNI.
    private void read_cascade_file() {
        copyFile("haarcascade_frontalface_alt.xml");
        copyFile("haarcascade_eye_tree_eyeglasses.xml");

        Log.d(TAG, "read_cascade_file:");
        cascadeClassifier_face = loadCascade(getFilesDir().getAbsolutePath() + "/haarcascade_frontalface_alt.xml");

        Log.d(TAG, "read_cascade_file:");
        cascadeClassifier_eye = loadCascade(getFilesDir().getAbsolutePath() + "/haarcascade_eye_tree_eyeglasses.xml");
    }

    private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {
        @Override
        public void onManagerConnected(int status) {
            switch (status) {
                case LoaderCallbackInterface.SUCCESS:
                {
                    mOpenCvCameraView.enableView();
                } break;
                default:
                {
                    super.onManagerConnected(status);
                } break;
            }
        }
    };

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);

        getWindow().setFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN,
                WindowManager.LayoutParams.FLAG_FULLSCREEN);
        getWindow().setFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON,
                WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);

        setContentView(R.layout.activity_main);

        mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.activity_surface_view);
        mOpenCvCameraView.setVisibility(SurfaceView.VISIBLE);
        mOpenCvCameraView.setCvCameraViewListener(this);
        mOpenCvCameraView.setCameraIndex(1); // front-camera(1), back-camera(0)

        // Save the current frame as a picture when the button is clicked.
        Button button = (Button) findViewById(R.id.button);
        button.setOnClickListener(new View.OnClickListener() {
            public void onClick(View v) {
                try {
                    getWriteLock();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                    return; // the lock was never acquired, so do not release it
                }
                try {
                    File path = Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_PICTURES);
                    path.mkdirs();
                    File file = new File(path, "image.jpg");
                    String filename = file.toString();

                    // Camera frames are RGBA, but imwrite expects BGR(A) channel order.
                    Imgproc.cvtColor(matResult, matResult, Imgproc.COLOR_RGBA2BGRA);
                    boolean ret = Imgcodecs.imwrite(filename, matResult);
                    if (ret) Log.d(TAG, "SUCCESS");
                    else Log.d(TAG, "FAIL");

                    Intent mediaScanIntent = new Intent(Intent.ACTION_MEDIA_SCANNER_SCAN_FILE);
                    mediaScanIntent.setData(Uri.fromFile(file));
                    sendBroadcast(mediaScanIntent);
                } finally {
                    releaseWriteLock();
                }
            }
        });
    }

    @Override
    public void onPause()
    {
        super.onPause();
        if (mOpenCvCameraView != null)
            mOpenCvCameraView.disableView();
    }

    @Override
    public void onResume()
    {
        super.onResume();

        if (!OpenCVLoader.initDebug()) {
            Log.d(TAG, "onResume :: Internal OpenCV library not found.");
            OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_3_2_0, this, mLoaderCallback);
        } else {
            Log.d(TAG, "onResume :: OpenCV library found inside package. Using it!");
            mLoaderCallback.onManagerConnected(LoaderCallbackInterface.SUCCESS);
        }
    }

    @Override
    public void onDestroy() {
        super.onDestroy();

        if (mOpenCvCameraView != null)
            mOpenCvCameraView.disableView();
    }

    @Override
    public void onCameraViewStarted(int width, int height) {
    }

    @Override
    public void onCameraViewStopped() {
    }

    // Uses the semaphore so a frame is not rewritten while it is being saved.
    @Override
    public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {
        try {
            getWriteLock();
        } catch (InterruptedException e) {
            e.printStackTrace();
            return inputFrame.rgba(); // lock not acquired: show the raw frame
        }
        try {
            matInput = inputFrame.rgba();

            if (matResult == null)
                matResult = new Mat(matInput.rows(), matInput.cols(), matInput.type());

            Core.flip(matInput, matInput, 1);

            detect(cascadeClassifier_face, cascadeClassifier_eye, matInput.getNativeObjAddr(),
                    matResult.getNativeObjAddr());
        } finally {
            releaseWriteLock();
        }

        return matResult;
    }

    protected List<? extends CameraBridgeViewBase> getCameraViewList() {
        return Collections.singletonList(mOpenCvCameraView);
    }

    // Permission-related methods start here.
    private static final int CAMERA_PERMISSION_REQUEST_CODE = 200;

    protected void onCameraPermissionGranted() {
        List<? extends CameraBridgeViewBase> cameraViews = getCameraViewList();
        if (cameraViews == null) {
            return;
        }
        for (CameraBridgeViewBase cameraBridgeViewBase: cameraViews) {
            if (cameraBridgeViewBase != null) {
                cameraBridgeViewBase.setCameraPermissionGranted();

                read_cascade_file();
            }
        }
    }

    @Override
    protected void onStart() {
        super.onStart();
        boolean havePermission = true;
        if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.M) {
            if (checkSelfPermission(CAMERA) != PackageManager.PERMISSION_GRANTED
                    || checkSelfPermission(WRITE_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED
            ) {
                requestPermissions(new String[]{CAMERA, WRITE_EXTERNAL_STORAGE}, CAMERA_PERMISSION_REQUEST_CODE);
                havePermission = false;
            }
        }
        if (havePermission) {
            onCameraPermissionGranted();
        }
    }

    @Override
    @TargetApi(Build.VERSION_CODES.M)
    public void onRequestPermissionsResult(int requestCode, String[] permissions, int[] grantResults) {
        // Two permissions are requested, so both results must be present before indexing.
        if (requestCode == CAMERA_PERMISSION_REQUEST_CODE && grantResults.length >= 2
                && grantResults[0] == PackageManager.PERMISSION_GRANTED
                && grantResults[1] == PackageManager.PERMISSION_GRANTED) {
            onCameraPermissionGranted();
        } else {
            showDialogForPermission("You must grant the permissions to run the app.");
        }
        super.onRequestPermissionsResult(requestCode, permissions, grantResults);
    }

    @TargetApi(Build.VERSION_CODES.M)
    private void showDialogForPermission(String msg) {
        AlertDialog.Builder builder = new AlertDialog.Builder(MainActivity.this);
        builder.setTitle("Notice");
        builder.setMessage(msg);
        builder.setCancelable(false);
        builder.setPositiveButton("Yes", new DialogInterface.OnClickListener() {
            public void onClick(DialogInterface dialog, int id) {
                requestPermissions(new String[]{CAMERA, WRITE_EXTERNAL_STORAGE}, CAMERA_PERMISSION_REQUEST_CODE);
            }
        });
        builder.setNegativeButton("No", new DialogInterface.OnClickListener() {
            public void onClick(DialogInterface arg0, int arg1) {
                finish();
            }
        });
        builder.create().show();
    }
}

The JNI functions are created automatically when you right-click each native declaration and select native-lib.cpp as the location.
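The semaphore in MainActivity keeps the camera callback and the save button from touching matResult at the same time. Here is a minimal plain-Java sketch of that pattern, with no Android or OpenCV involved. The class FrameLockSketch, its methods, and the int array standing in for the frame are all hypothetical names chosen for illustration.

```java
import java.util.concurrent.Semaphore;

// Plain-Java sketch; FrameLockSketch and its members are hypothetical names.
final class FrameLockSketch {
    private final Semaphore writeLock = new Semaphore(1);
    private final int[] latestFrame = new int[3]; // stand-in for matResult

    // Called by the "camera" thread for every frame (frame needs at least 3 values).
    void onFrame(int[] frame) throws InterruptedException {
        writeLock.acquire();
        try {
            System.arraycopy(frame, 0, latestFrame, 0, latestFrame.length);
        } finally {
            writeLock.release(); // reached only after a successful acquire
        }
    }

    // Called by the "button" thread; returns a private copy of the frame.
    int[] snapshot() throws InterruptedException {
        writeLock.acquire();
        try {
            return latestFrame.clone();
        } finally {
            writeLock.release();
        }
    }

    int availablePermits() {
        return writeLock.availablePermits();
    }

    public static void main(String[] args) throws InterruptedException {
        FrameLockSketch lock = new FrameLockSketch();
        Thread camera = new Thread(() -> {
            try {
                for (int i = 1; i <= 1000; i++) {
                    lock.onFrame(new int[] {i, i, i});
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        camera.start();
        int[] saved = lock.snapshot(); // copied while no frame is being written
        camera.join();
        System.out.println("saved frame length: " + saved.length);
    }
}
```

Calling acquire() before the try block means the finally clause can only run when the permit is actually held, so an interrupted wait never releases a permit it did not take.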

6. Modify the native-lib.cpp file

#include <jni.h>
//#include <string>
#include <opencv2/opencv.hpp>
#include <android/log.h>

using namespace std;
using namespace cv;

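// Shrinks img_src to resize_width when it is wider than that.
// Returns the scale factor that was actually applied (1 when nothing was resized).
float resize(Mat img_src, Mat &img_resize, int resize_width) {
    float scale = resize_width / (float) img_src.cols;
    if (img_src.cols > resize_width) {
        int new_height = cvRound(img_src.rows * scale);
        cv::resize(img_src, img_resize, Size(resize_width, new_height));
    } else {
        img_resize = img_src;
        scale = 1.0f; // image left as-is, so detected coordinates need no rescaling
    }
    return scale;
}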

//extern "C"
//JNIEXPORT void JNICALL
//Java_com_tistory_webnautes_useopencvwithcmake_MainActivity_ConvertRGBtoGray(JNIEnv *env,
//        jobject thiz,
//        jlong mat_addr_input,
//        jlong mat_addr_result) {
//    // TODO: implement ConvertRGBtoGray()
//    Mat &matInput = *(Mat *)mat_addr_input;
//    Mat &matResult = *(Mat *)mat_addr_result;
//
//    cvtColor(matInput, matResult, COLOR_RGBA2GRAY);
//}

// The function name must match the Java package and class,
// here com.example.myapplication.MainActivity.
extern "C"
JNIEXPORT jlong JNICALL
Java_com_example_myapplication_MainActivity_loadCascade(JNIEnv *env, jobject thiz,
                                                       jstring cascade_file_name) {
    const char *nativeFileNameString = env->GetStringUTFChars(cascade_file_name, 0);

    string baseDir("");
    baseDir.append(nativeFileNameString);
    const char *pathDir = baseDir.c_str();

    jlong ret = 0;
    ret = (jlong) new CascadeClassifier(pathDir);
    if (((CascadeClassifier *) ret)->empty()) {
        __android_log_print(ANDROID_LOG_DEBUG, "native-lib :: ",
                            "Failed to load CascadeClassifier: %s", nativeFileNameString);
    } else {
        __android_log_print(ANDROID_LOG_DEBUG, "native-lib :: ",
                            "Loaded CascadeClassifier: %s", nativeFileNameString);
    }

    env->ReleaseStringUTFChars(cascade_file_name, nativeFileNameString);

    return ret;
}

extern "C"
JNIEXPORT void JNICALL
Java_com_example_myapplication_MainActivity_detect(JNIEnv *env, jobject thiz,
                                                  jlong cascade_classifier_face,
                                                  jlong cascade_classifier_eye,
                                                  jlong mat_addr_input,
                                                  jlong mat_addr_result) {
    Mat &img_input = *(Mat *) mat_addr_input;
    Mat &img_result = *(Mat *) mat_addr_result;

    img_result = img_input.clone();

    std::vector<Rect> faces;
    Mat img_gray;

    // Frames from inputFrame.rgba() are RGBA, so convert from RGBA.
    cvtColor(img_input, img_gray, COLOR_RGBA2GRAY);
    equalizeHist(img_gray, img_gray);

    Mat img_resize;
    float resizeRatio = resize(img_gray, img_resize, 640);

    //-- Detect faces
    ((CascadeClassifier *) cascade_classifier_face)->detectMultiScale(img_resize, faces, 1.1, 2, 0 | CASCADE_SCALE_IMAGE, Size(30, 30));

    __android_log_print(ANDROID_LOG_DEBUG, (char *) "native-lib :: ",
                        (char *) "face %d found ", (int) faces.size());

    for (size_t i = 0; i < faces.size(); i++) {
        // Map the rectangle found in the shrunken image back to full-size coordinates.
        double real_facesize_x = faces[i].x / resizeRatio;
        double real_facesize_y = faces[i].y / resizeRatio;
        double real_facesize_width = faces[i].width / resizeRatio;
        double real_facesize_height = faces[i].height / resizeRatio;

        Point center(real_facesize_x + real_facesize_width / 2, real_facesize_y + real_facesize_height / 2);
        ellipse(img_result, center, Size(real_facesize_width / 2, real_facesize_height / 2), 0, 0, 360,
                Scalar(255, 0, 255), 30, 8, 0);

        Rect face_area(real_facesize_x, real_facesize_y, real_facesize_width, real_facesize_height);
        // Clamp to the image so rounding can never push the ROI out of bounds.
        face_area &= Rect(0, 0, img_gray.cols, img_gray.rows);
        Mat faceROI = img_gray(face_area);
        std::vector<Rect> eyes;

        //-- In each face, detect eyes
        ((CascadeClassifier *) cascade_classifier_eye)->detectMultiScale(faceROI, eyes, 1.1, 2, 0 | CASCADE_SCALE_IMAGE, Size(30, 30));

        for (size_t j = 0; j < eyes.size(); j++)
        {
            Point eye_center(real_facesize_x + eyes[j].x + eyes[j].width / 2, real_facesize_y + eyes[j].y + eyes[j].height / 2);
            int radius = cvRound((eyes[j].width + eyes[j].height) * 0.25);
            circle(img_result, eye_center, radius, Scalar(255, 0, 0), 30, 8, 0);
        }
    }
}

Because this step uses C++ code, the NDK is required.
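The detect function runs the face cascade on a copy of the frame shrunk to 640 pixels wide, then divides each rectangle by the scale factor so it can be drawn on the full-size frame. The same arithmetic can be sketched in plain Java. RescaleSketch, scaleFor, and toOriginal are hypothetical names, and the sketch rounds to the nearest pixel, whereas the C++ listing truncates.

```java
import java.util.Arrays;

// Plain-Java sketch; RescaleSketch, scaleFor and toOriginal are hypothetical names.
final class RescaleSketch {

    // Scale factor applied when shrinking an image to targetWidth;
    // 1.0 when the image is already narrow enough and is left untouched.
    static double scaleFor(int originalWidth, int targetWidth) {
        return originalWidth > targetWidth ? targetWidth / (double) originalWidth : 1.0;
    }

    // Maps a rectangle {x, y, width, height} found in the shrunken image
    // back to original-image pixels by dividing each value by the scale factor.
    static int[] toOriginal(int[] rect, double scale) {
        int[] out = new int[4];
        for (int i = 0; i < 4; i++) {
            out[i] = (int) Math.round(rect[i] / scale);
        }
        return out;
    }

    public static void main(String[] args) {
        double scale = scaleFor(1280, 640); // a 1280-pixel-wide frame shrunk to 640
        int[] face = toOriginal(new int[] {100, 50, 80, 80}, scale);
        System.out.println(Arrays.toString(face));
    }
}
```

Detecting on the smaller image reduces the work per frame, and the division by the scale factor is what keeps the drawn ellipse aligned with the face in the full-resolution preview.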

Result

Successfully detected Yuna's face!!!

+

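Important!!

If you get a fatal signal 5 error:

Do not simply copy and paste the native-lib code. Generate the JNI functions first, then paste the matching body into each generated function.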
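One reason the generated stubs matter is that a JNI function name encodes the Java package, class, and method it belongs to, so a native-lib.cpp written for a different package (for example com.tistory.webnautes.useopencvwithcmake in the referenced tutorial) will not match a project whose package is com.example.myapplication. The plain-Java sketch below builds the expected short symbol name using the JNI name-mangling rules. JniNameSketch, mangle, and jniFunctionName are hypothetical helper names, not part of any Android or OpenCV API, and overloaded native methods (which get an extra signature suffix) are not handled.

```java
// Plain-Java sketch; JniNameSketch, mangle and jniFunctionName are hypothetical names.
final class JniNameSketch {

    // Escapes a name following the JNI name-mangling rules:
    // '.' or '/' -> "_", '_' -> "_1", ';' -> "_2", '[' -> "_3",
    // any other non-alphanumeric-ASCII character -> "_0xxxx" (lowercase hex).
    static String mangle(String name) {
        StringBuilder sb = new StringBuilder();
        for (char c : name.toCharArray()) {
            if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9')) {
                sb.append(c);
            } else if (c == '.' || c == '/') {
                sb.append('_');
            } else if (c == '_') {
                sb.append("_1");
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else {
                sb.append(String.format("_0%04x", (int) c));
            }
        }
        return sb.toString();
    }

    // Short (non-overloaded) JNI symbol name for a native method.
    static String jniFunctionName(String fullyQualifiedClass, String method) {
        return "Java_" + mangle(fullyQualifiedClass) + "_" + mangle(method);
    }

    public static void main(String[] args) {
        System.out.println(jniFunctionName("com.example.myapplication.MainActivity", "loadCascade"));
        System.out.println(jniFunctionName("com.example.myapplication.MainActivity", "detect"));
    }
}
```

Comparing the printed names with the function names in native-lib.cpp is a quick way to check whether pasted code belongs to the current package.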

Errors also often seem to come from not checking the contents of CMakeLists.txt carefully.

++

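Other OpenCV code written in C++ can reportedly be applied in the same way, so this approach should be useful in many places.

However, most of the OpenCV code I want to use is written in Python, so I will need to study more.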
